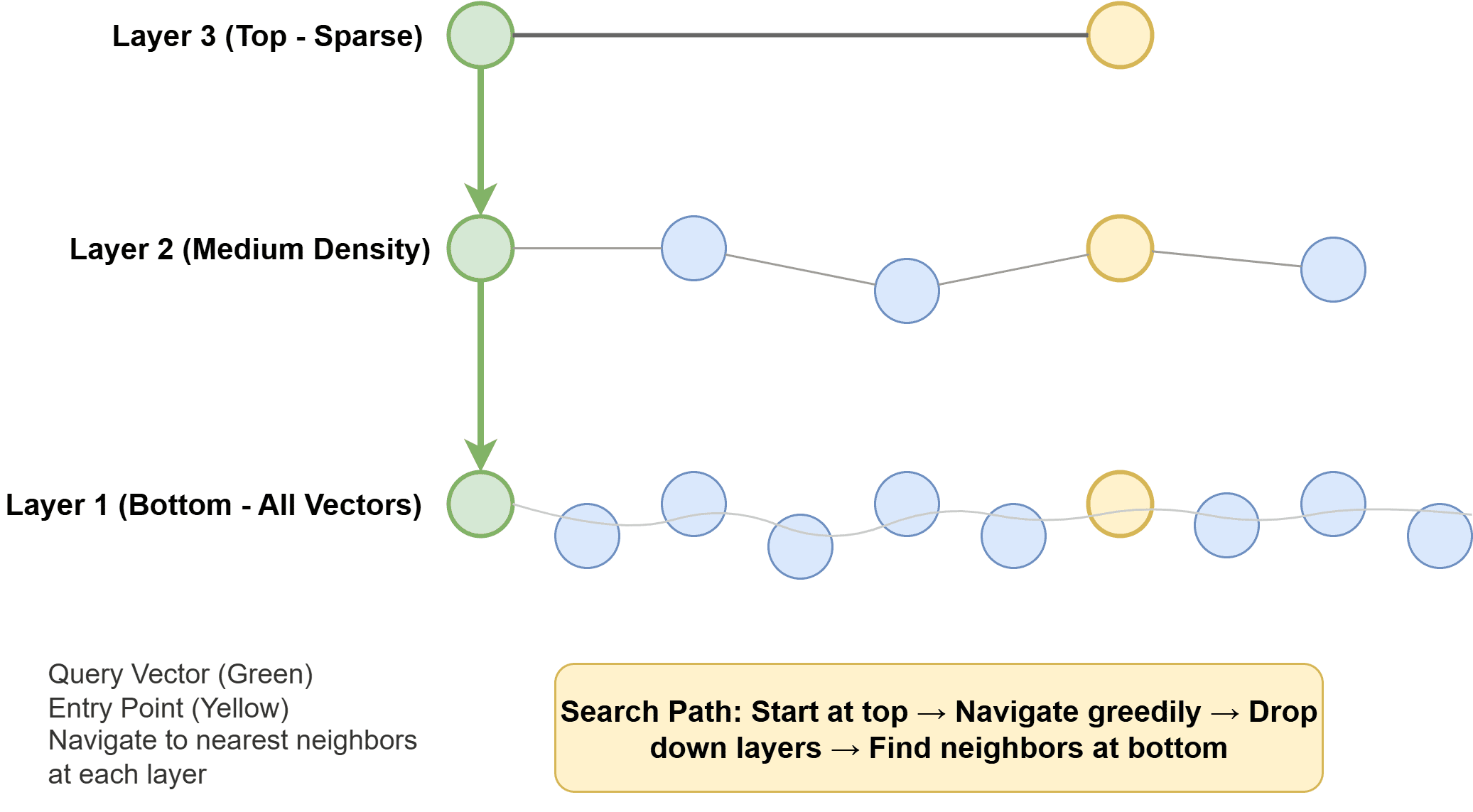

Hierarchical Navigable Small World (HNSW)

Hierarchical Navigable Small World (HNSW) builds a multi-layer graph structure where each layer contains a subset of vectors connected by edges. The top layer is sparse, containing only a few well-distributed vectors. Each lower layer adds more vectors and connections, with the bottom layer containing all vectors.
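The text above does not say how vectors end up on each layer. In the original HNSW paper, each vector draws a random top layer from an exponentially decaying distribution, `level = floor(-ln(U) * mL)` with `U` uniform in (0, 1] and `mL = 1/ln(M)`. A vector then appears on its top layer and on every layer below it. The sketch below shows only that layer membership under those assumptions; edge construction is omitted, and the names `assign_level` and `build_layers` are hypothetical.

```python
import math
import random

def assign_level(rng: random.Random, m_l: float) -> int:
    """Draw a vector's top layer; each higher layer is exponentially rarer."""
    u = 1.0 - rng.random()  # uniform in (0, 1], so log(u) is always defined
    return int(-math.log(u) * m_l)

def build_layers(num_vectors: int, m: int = 16, seed: int = 0) -> list[list[int]]:
    """Return layers[l] = ids of vectors present on layer l (layer 0 is the bottom)."""
    rng = random.Random(seed)
    m_l = 1.0 / math.log(m)  # level normalization factor suggested in the HNSW paper
    levels = [assign_level(rng, m_l) for _ in range(num_vectors)]
    # A vector whose top level is L is present on every layer 0..L.
    return [[i for i, lvl in enumerate(levels) if lvl >= layer]
            for layer in range(max(levels) + 1)]

layers = build_layers(10_000)
for layer, ids in enumerate(layers):
    print(f"layer {layer}: {len(ids)} vectors")
```

The bottom layer always holds every vector, and each layer above holds roughly a `1/M` fraction of the one below. This is why the top layer stays sparse without any explicit selection step.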

Search starts from an entry point in the top layer and greedily moves to whichever connected vector is closest to the query. When no neighbor is closer than the current vector, it drops down one layer and repeats from that vector. This continues until it reaches the bottom layer, where the final search returns the nearest neighbors.

Hierarchical Navigable Small World (HNSW) | Image by Author
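The layered search described above can be sketched in pure Python, assuming the graph is already built and distance is Euclidean. Here `layers[0]` is the bottom layer and each layer is an adjacency dict. Following the HNSW paper, upper layers use a beam of width 1, which is the greedy step, and the bottom layer uses a wider beam `ef`. The toy graph is a hypothetical 10×10 grid whose coarser layers keep every third and sixth point. It is not the output of real HNSW construction, and all function names are illustrative.

```python
import heapq
import math

def search_layer(query, entry_ids, ef, adj, vectors):
    """Best-first search on one layer; returns up to `ef` (distance, id) pairs, closest first."""
    visited = set(entry_ids)
    candidates = [(math.dist(query, vectors[i]), i) for i in entry_ids]  # min-heap to explore
    heapq.heapify(candidates)
    results = [(-d, i) for d, i in candidates]  # max-heap (negated) of the best ef found so far
    heapq.heapify(results)
    while candidates:
        d, node = heapq.heappop(candidates)
        if d > -results[0][0]:  # nearest unexplored node is worse than our worst result: stop
            break
        for nb in adj.get(node, ()):
            if nb in visited:
                continue
            visited.add(nb)
            d_nb = math.dist(query, vectors[nb])
            if len(results) < ef or d_nb < -results[0][0]:
                heapq.heappush(candidates, (d_nb, nb))
                heapq.heappush(results, (-d_nb, nb))
                if len(results) > ef:
                    heapq.heappop(results)  # drop the current worst result
    return sorted((-neg_d, i) for neg_d, i in results)

def hnsw_search(query, layers, vectors, entry, k=1, ef=8):
    """layers[0] is the bottom layer; layers[-1] is the sparse top layer."""
    entry_ids = [entry]
    for adj in reversed(layers[1:]):  # top layer down to layer 1: greedy, beam width 1
        entry_ids = [search_layer(query, entry_ids, 1, adj, vectors)[0][1]]
    # Bottom layer (all vectors): wider beam for the final neighbor list.
    return search_layer(query, entry_ids, max(ef, k), layers[0], vectors)[:k]

def grid_layer(size, step):
    """Hypothetical toy layer: grid points whose coordinates are multiples of `step`."""
    adj = {}
    for y in range(0, size, step):
        for x in range(0, size, step):
            adj[y * size + x] = [ny * size + nx
                                 for nx, ny in ((x + step, y), (x - step, y), (x, y + step), (x, y - step))
                                 if 0 <= nx < size and 0 <= ny < size]
    return adj

vectors = [(x, y) for y in range(10) for x in range(10)]  # id = 10*y + x
layers = [grid_layer(10, 1), grid_layer(10, 3), grid_layer(10, 6)]  # bottom to top
hits = hnsw_search((7.2, 2.9), layers, vectors, entry=0, k=3)
print([i for _, i in hits])  # [37, 38, 27], i.e. points (7,3), (8,3), (7,2)
```

The coarse layers carry the query from the far corner `(0, 0)` to `(6, 3)` in a few hops. The bottom-layer beam then only has to refine locally.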

Inverted File Index (IVF)

Inverted File Index (IVF) partitions the vector space into regions using clustering algorithms like K-means. During indexing, each vector is assigned to its nearest cluster centroid. During search, you first identify the clusters whose centroids are closest to the query, then search only the vectors within those clusters.

IVF: Partitioning Vector Space into Clusters | Image by Author
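The two IVF phases can be shown in a minimal pure-Python sketch. It assumes Euclidean distance and a small hand-rolled K-means; a real system would use an optimized library. `nlist` (the number of clusters) and `nprobe` (how many clusters to scan per query) are illustrative parameter names, and `kmeans` and `IVFIndex` are hypothetical.

```python
import math
import random

def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's K-means; returns k centroids as tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centroids[j]))
            buckets[nearest].append(p)
        for j, bucket in enumerate(buckets):
            if bucket:  # keep the previous centroid if the cluster went empty
                centroids[j] = tuple(sum(col) / len(bucket) for col in zip(*bucket))
    return centroids

class IVFIndex:
    def __init__(self, vectors, nlist, seed=0):
        self.vectors = vectors
        self.centroids = kmeans(vectors, nlist, seed=seed)
        # Indexing: each vector id goes into the inverted list of its nearest centroid.
        self.lists = [[] for _ in range(nlist)]
        for i, v in enumerate(vectors):
            self.lists[self._closest_centroids(v, 1)[0]].append(i)

    def _closest_centroids(self, v, n):
        return sorted(range(len(self.centroids)),
                      key=lambda j: math.dist(v, self.centroids[j]))[:n]

    def search(self, query, k=5, nprobe=2):
        # Search: scan only the nprobe clusters whose centroids are closest to the query.
        candidates = [i for c in self._closest_centroids(query, nprobe) for i in self.lists[c]]
        return sorted(candidates, key=lambda i: math.dist(query, self.vectors[i]))[:k]

rng = random.Random(1)
data = [(rng.uniform(0, 100), rng.uniform(0, 100)) for _ in range(500)]
index = IVFIndex(data, nlist=10)
query = (50.0, 50.0)
print(index.search(query, k=5, nprobe=2))  # approximate: only 2 of the 10 clusters are scanned
```

`nprobe` is the speed/recall dial. With `nprobe=2`, a true neighbor just across a cluster boundary can be missed. With `nprobe == nlist`, the search degenerates into an exact brute-force scan.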

Product Quantization (PQ)

Product quantization (PQ) compresses vectors to reduce memory usage and speed up distance calculations. It splits each vector into subvectors, then clusters each subspace independently, producing a separate codebook of centroids for every subspace. During indexing, each vector is stored as a sequence of centroid IDs, one per subvector, rather than as raw floats.

Product Quantization: Compressing High-Dimensional Vectors | Image by Author
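Below is a minimal pure-Python sketch of PQ training and encoding. For the "faster distance calculations" part, it uses one common technique, asymmetric distance computation: the query stays exact, and each stored code's squared distance is summed from precomputed per-subspace lookup tables. The class `ProductQuantizer`, its methods, and the parameters `m` (subvectors per vector) and `ksub` (centroids per codebook) are hypothetical names chosen for illustration.

```python
import math
import random

def kmeans(points, k, rng, iters=15):
    """Plain Lloyd's K-means on a list of tuples; returns k centroids."""
    centroids = rng.sample(points, k)
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for p in points:
            buckets[min(range(k), key=lambda j: math.dist(p, centroids[j]))].append(p)
        for j, bucket in enumerate(buckets):
            if bucket:  # keep the previous centroid if the cluster went empty
                centroids[j] = tuple(sum(col) / len(bucket) for col in zip(*bucket))
    return centroids

class ProductQuantizer:
    def __init__(self, m, ksub, seed=0):
        self.m = m          # number of subvectors per vector
        self.ksub = ksub    # centroids per subspace codebook
        self.rng = random.Random(seed)
        self.codebooks = []

    def _split(self, v):
        d = len(v) // self.m
        return [tuple(v[j * d:(j + 1) * d]) for j in range(self.m)]

    def fit(self, vectors):
        assert len(vectors[0]) % self.m == 0, "dimension must be divisible by m"
        # zip(*...) regroups subvectors so each group holds the j-th slice of every vector.
        subspaces = zip(*(self._split(v) for v in vectors))
        self.codebooks = [kmeans(list(s), self.ksub, self.rng) for s in subspaces]
        return self

    def encode(self, v):
        # One centroid id per subvector replaces the raw floats.
        return tuple(min(range(self.ksub), key=lambda c: math.dist(sub, book[c]))
                     for sub, book in zip(self._split(v), self.codebooks))

    def decode(self, code):
        return tuple(x for j, c in enumerate(code) for x in self.codebooks[j][c])

    def adc_distances(self, query, codes):
        # Precompute squared distances from each query subvector to every centroid;
        # each code's distance is then m table lookups and a sum.
        tables = [[math.dist(sub, cen) ** 2 for cen in book]
                  for sub, book in zip(self._split(query), self.codebooks)]
        return [sum(tables[j][c] for j, c in enumerate(code)) for code in codes]

rng = random.Random(42)
data = [tuple(rng.gauss(0, 1) for _ in range(8)) for _ in range(300)]
pq = ProductQuantizer(m=4, ksub=16).fit(data)
codes = [pq.encode(v) for v in data]
dists = pq.adc_distances(data[0], codes)  # approximate squared distances to all 300 vectors
```

With `ksub = 16`, each id fits in 4 bits, so a 4-id code could be packed into 2 bytes instead of eight 32-bit floats (32 bytes). The sketch keeps codes as Python tuples for readability. Because squared Euclidean distance sums over disjoint subspaces, the table lookup gives exactly the distance to the decoded (reconstructed) vector.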

https://machinelearningmastery.com/the-complete-guide-to-vector-databases-for-machine-learning/
